Table of Contents

- 1 Gradient Descent Optimization Explained: A Deep Dive

- 1.1 Understanding Gradient Descent Optimization

- 1.1.1 What Is Gradient Descent?

- 1.1.2 Why Is It Important?

- 1.1.3 The Math Behind Gradient Descent

- 1.1.4 Types of Gradient Descent

- 1.1.5 Choosing the Right Learning Rate

- 1.1.6 Convergence and Stopping Criteria

- 1.1.7 Challenges and Limitations

- 1.1.8 Advanced Variants of Gradient Descent

- 1.1.9 Practical Tips for Using Gradient Descent

- 1.1.10 Real-World Applications

- 1.2 Conclusion: The Power of Gradient Descent

- 1.3 FAQ


Gradient Descent Optimization Explained: A Deep Dive

Ever wondered how machines learn? At the heart of many machine learning algorithms lies a powerful technique called gradient descent optimization. It’s like the secret sauce that helps models improve over time. But what is it, really? And how does it work? Let’s dive in and explore gradient descent optimization, from its basic principles to its more complex applications.

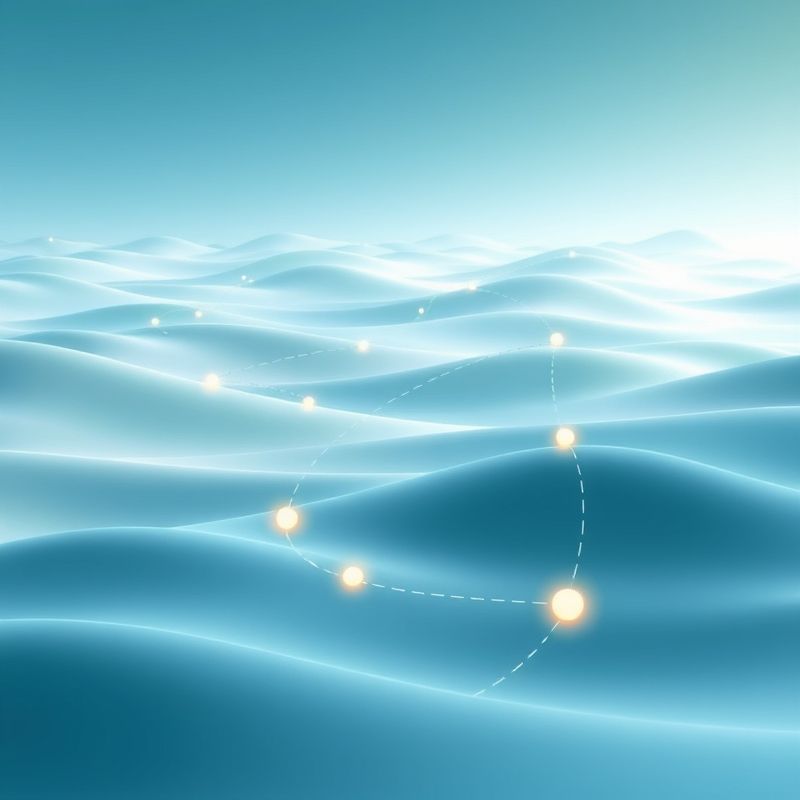

When I first heard about gradient descent, I was a bit skeptical. How could something so simple be so powerful? But as I delved deeper, I realized it’s all about finding the minimum point in a landscape of possibilities. It’s like hiking down a valley—you want to reach the bottom, but you can only see a few steps ahead. That’s gradient descent in a nutshell.

In this article, we’ll break down gradient descent optimization into digestible bits. We’ll look at what it is, how it works, and why it’s so crucial in machine learning. By the end, you’ll have a solid understanding of this foundational concept. So, grab a coffee, and let’s get started!

Understanding Gradient Descent Optimization

What Is Gradient Descent?

Gradient descent is an optimization algorithm used to minimize the cost function in machine learning and deep learning. Think of the cost function as a bowl-shaped curve; gradient descent helps find the lowest point of this curve, which represents the best parameters for your model.

The basic idea is to start at a random point on the curve and then iteratively move towards the minimum point. At each step, the algorithm calculates the gradient (the slope of the curve) and moves in the opposite direction of the gradient. This process continues until it reaches the minimum point, or at least a point that’s close enough.
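To make the walk down the curve concrete, here is a minimal sketch in plain Python. The cost function, starting point, and step size are all made up for illustration; nothing here comes from a particular library.

```python
def cost(x):
    """Illustrative bowl-shaped cost with its minimum at x = 3."""
    return (x - 3.0) ** 2


def slope(x):
    """Derivative of cost with respect to x (the 1-D gradient)."""
    return 2.0 * (x - 3.0)


x = -4.0          # arbitrary starting point on the curve (an assumption)
step_size = 0.1   # how far to move against the slope on each step

for _ in range(100):
    # Move in the direction opposite to the slope.
    x = x - step_size * slope(x)

print(x, cost(x))
```

After 100 steps, `x` sits very close to 3, the bottom of the bowl. Each step shrinks the distance to the minimum by the same factor, which is why the progress is fast at first and then tapers off.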

Why Is It Important?

Gradient descent is crucial because it scales well: each step needs only the gradient of the cost function, which stays affordable to compute even for models with millions of parameters. It’s used in a wide range of applications, from training neural networks to fitting logistic regression models. Without gradient descent, many of the machine learning algorithms we use today wouldn’t be nearly as effective.

But is this the best approach? Let’s consider the alternatives. Other optimization algorithms exist, like Newton’s method, which also uses second derivatives, or conjugate gradient. Gradient descent remains popular because it needs only first derivatives and is simple to implement. That balance between complexity and performance makes it a go-to choice for many practitioners.

The Math Behind Gradient Descent

Let’s get a bit technical. The gradient descent algorithm updates the parameters of the model iteratively. The update rule is:

θ = θ – η * ∇J(θ)

Where:

- θ is the parameter vector.

- η is the learning rate, a hyperparameter that controls the step size.

- ∇J(θ) is the gradient of the cost function with respect to the parameters.

The learning rate is a critical factor. Too high, and the algorithm might overshoot the minimum; too low, and it might take forever to converge. Finding the right learning rate is often a matter of trial and error.
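As a rough illustration of the update rule, the sketch below applies a single step to a two-parameter cost. The cost `cost_J`, its gradient `grad_J`, and the helper `gradient_step` are hypothetical names chosen for this example.

```python
def gradient_step(theta, grad, eta):
    """Apply theta <- theta - eta * grad, element by element."""
    return [t - eta * g for t, g in zip(theta, grad)]


def cost_J(theta):
    """Illustrative cost: J(theta) = theta_0^2 + 4 * theta_1^2."""
    return theta[0] ** 2 + 4.0 * theta[1] ** 2


def grad_J(theta):
    """Gradient of cost_J with respect to each parameter."""
    return [2.0 * theta[0], 8.0 * theta[1]]


theta = [1.0, 1.0]   # current parameter vector
eta = 0.1            # learning rate

# Gradient at theta is [2, 8], so the step is
# [1 - 0.1 * 2, 1 - 0.1 * 8], roughly [0.8, 0.2].
theta_next = gradient_step(theta, grad_J(theta), eta)

print(theta_next, cost_J(theta), cost_J(theta_next))
```

The cost drops from 5 to about 0.8 in one step. Notice that the second parameter moves further because its gradient component is larger.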

Types of Gradient Descent

There are three main types of gradient descent:

- Batch Gradient Descent: Uses the entire dataset to compute the gradient at each step. It’s accurate but can be slow for large datasets.

- Stochastic Gradient Descent (SGD): Uses one data point at a time to compute the gradient. Each update is much cheaper, but the gradient estimate is noisy.

- Mini-Batch Gradient Descent: A compromise between the two, using a small subset of the data at each step. It balances speed and accuracy.

Each type has its pros and cons. Batch gradient descent is reliable but slow. SGD is fast but can be erratic. Mini-batch gradient descent offers a good middle ground, making it a popular choice in practice.
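The three types differ only in how many data points feed each gradient estimate, so one function with a `batch_size` argument can sketch all of them. This is a toy example under stated assumptions: a tiny noise-free dataset on the line y = 2x + 1, a mean squared error cost, and hand-picked values for `eta` and `epochs`.

```python
import random


def train(xs, ys, batch_size, eta=0.1, epochs=500, seed=0):
    """Fit y ~ w * x + b by gradient descent on mean squared error.

    batch_size == len(xs) -> batch gradient descent
    batch_size == 1       -> stochastic gradient descent
    anything in between   -> mini-batch gradient descent
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the data in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over this batch only.
            grad_w = sum(2.0 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2.0 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= eta * grad_w
            b -= eta * grad_b
    return w, b


xs = [i / 10.0 for i in range(20)]   # 0.0, 0.1, ..., 1.9
ys = [2.0 * x + 1.0 for x in xs]     # noise-free line y = 2x + 1

batch_fit = train(xs, ys, batch_size=len(xs))   # 1 update per epoch
sgd_fit = train(xs, ys, batch_size=1)           # 20 updates per epoch
mini_fit = train(xs, ys, batch_size=5)          # 4 updates per epoch

print(batch_fit, sgd_fit, mini_fit)
```

Because the data is noise-free, all three recover a slope near 2 and an intercept near 1. On real, noisy data the paths differ more: the single-point updates jump around, while the full-batch updates are smooth but each one costs a pass over the whole dataset.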

Choosing the Right Learning Rate

The learning rate is a hyperparameter that determines the size of the steps taken towards the minimum. A high learning rate can lead to overshooting the minimum, while a low learning rate can result in slow convergence. Finding the optimal learning rate often involves experimentation and tuning.
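A quick experiment shows both failure modes. The sketch below runs the same 50 steps on the toy cost f(x) = x², whose gradient is 2x, with three learning rates picked purely for illustration.

```python
def run(eta, steps=50, x0=1.0):
    """Run gradient descent on f(x) = x^2 and return the final x."""
    x = x0
    for _ in range(steps):
        x = x - eta * 2.0 * x   # gradient of x^2 is 2x
    return x


too_small = run(0.001)    # barely moves away from the start
reasonable = run(0.1)     # ends close to the minimum at 0
too_large = run(1.1)      # overshoots further on every step

print(too_small, reasonable, too_large)
```

On this cost, each step multiplies `x` by (1 - 2 * eta). With 0.001 that factor is 0.998, so progress is painfully slow. With 1.1 the factor is -1.2, so `x` flips sign and grows each step, which is what overshooting looks like in practice.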

I’m torn between using a fixed learning rate and an adaptive one. Fixed learning rates are simpler, but adaptive learning rates can adjust on the fly, potentially leading to better performance. Ultimately, the choice depends on the specific problem and dataset.

Convergence and Stopping Criteria

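Gradient descent algorithms typically stop when the change in the cost function (or the size of the gradient) falls below a certain threshold, which is what we call convergence, or when they hit a predefined maximum number of iterations. Hitting the iteration cap alone doesn’t mean the algorithm has converged, so it’s worth checking which condition ended the run. Ensuring that the algorithm converges to a good solution is crucial for the model’s performance.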
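Here is one way those two stopping rules might look in code. The function name `minimize`, the tolerance, and the iteration cap are assumptions for this sketch; the function also reports which rule ended the run.

```python
def minimize(cost, grad, x0, eta=0.1, max_iters=10_000, tol=1e-10):
    """Gradient descent with two stopping rules.

    Returns (x, iterations_used, reason_for_stopping).
    """
    x = x0
    previous_cost = cost(x)
    for iteration in range(1, max_iters + 1):
        x = x - eta * grad(x)
        current_cost = cost(x)
        # Rule 1: the cost has stopped changing by a meaningful amount.
        if abs(previous_cost - current_cost) < tol:
            return x, iteration, "cost change below tolerance"
        previous_cost = current_cost
    # Rule 2: give up after a fixed number of iterations.
    return x, max_iters, "iteration limit reached"


def bowl(x):
    return (x - 3.0) ** 2


def bowl_grad(x):
    return 2.0 * (x - 3.0)


x_final, iterations, reason = minimize(bowl, bowl_grad, x0=0.0)
x_capped, capped_iterations, capped_reason = minimize(bowl, bowl_grad, x0=0.0, max_iters=5)

print(x_final, iterations, reason)
print(x_capped, capped_iterations, capped_reason)
```

The first call stops early because the cost flattens out. The second hits the cap of 5 iterations while still well short of the minimum, which is why the reason for stopping is worth logging.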

One clarification: convergence doesn’t always mean finding the global minimum. In complex, non-convex landscapes, the algorithm might get stuck in a local minimum or stall in a flat region. Techniques like simulated annealing or adding noise can help escape these local minima.
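The effect is easy to reproduce on a small non-convex function. The sketch below uses f(x) = x⁴ - 3x² + x, an example picked for illustration because it has two valleys of different depth, and runs plain gradient descent from two starting points.

```python
def f(x):
    """Illustrative non-convex cost with two separate minima."""
    return x ** 4 - 3.0 * x ** 2 + x


def df(x):
    """Derivative of f."""
    return 4.0 * x ** 3 - 6.0 * x + 1.0


def descend(x, eta=0.01, steps=1000):
    """Plain gradient descent from a given starting point."""
    for _ in range(steps):
        x = x - eta * df(x)
    return x


from_left = descend(-2.0)    # settles in the deeper valley
from_right = descend(2.0)    # settles in the shallower valley

print(from_left, f(from_left))
print(from_right, f(from_right))
```

Both runs converge, but to different points: the run from the left ends near x = -1.30, the run from the right near x = 1.13, and the cost on the right is noticeably higher. Same algorithm, same learning rate, and the only difference is where it started.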

Challenges and Limitations

Gradient descent isn’t without its challenges. One major issue is the vanishing gradient problem, where the gradients become very small, making it difficult for the algorithm to update the parameters effectively. This is especially problematic in deep neural networks.

Another challenge is the exploding gradient problem, where the gradients become very large, leading to unstable updates. Gradient clipping caps the size of exploding gradients, while normalization and careful weight initialization can help with both problems.
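Gradient clipping is simple enough to sketch in a few lines. The version below rescales a gradient so its length never exceeds a threshold; the helper name `clip_by_norm` and the threshold value are assumptions for this example, not any framework's API.

```python
import math


def clip_by_norm(grad, max_norm):
    """Rescale grad so its Euclidean norm is at most max_norm.

    The direction is preserved; only the length is capped.
    """
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm:
        return list(grad)
    scale = max_norm / norm
    return [g * scale for g in grad]


exploding = [300.0, -400.0]                  # norm is 500
clipped = clip_by_norm(exploding, max_norm=5.0)
untouched = clip_by_norm([0.3, -0.4], max_norm=5.0)

print(clipped, untouched)
```

The large gradient comes back as roughly [3, -4], which points the same way but has length 5. The small gradient passes through unchanged, so clipping only kicks in when updates would otherwise blow up.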

Advanced Variants of Gradient Descent

Over the years, several advanced variants of gradient descent have been developed to address its limitations. Some popular ones include:

- Momentum: Adds a fraction of the previous update to the current update, helping to accelerate convergence.

- Nesterov Accelerated Gradient (NAG): A variation of momentum that looks ahead to where the parameters are going to be, providing a correction.

- AdaGrad: Adapts the learning rate for each parameter based on the accumulated squared gradients, so parameters that have seen large gradients take smaller steps.

- RMSprop: Adapts the learning rate based on a moving average of the squared gradients.

- Adam: Combines the ideas of momentum and RMSprop, providing adaptive learning rates and bias correction.

Each of these variants has its own strengths and weaknesses, and the choice of which one to use depends on the specific problem and dataset.
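To give a feel for how two of these variants work, here is a bare-bones sketch of momentum and Adam in plain Python. The test cost, the starting point, and every hyperparameter value are assumptions picked for illustration; real frameworks ship tuned, more careful implementations.

```python
import math


def J(theta):
    """Illustrative cost, much steeper along the second parameter."""
    return theta[0] ** 2 + 10.0 * theta[1] ** 2


def grad(theta):
    return [2.0 * theta[0], 20.0 * theta[1]]


def momentum(theta, eta=0.02, beta=0.9, steps=300):
    """Each update reuses a fraction (beta) of the previous update."""
    velocity = [0.0] * len(theta)
    for _ in range(steps):
        g = grad(theta)
        velocity = [beta * v + eta * gi for v, gi in zip(velocity, g)]
        theta = [th - v for th, v in zip(theta, velocity)]
    return theta


def adam(theta, eta=0.05, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Running mean of gradients (m) scaled by running mean of squares (v)."""
    m = [0.0] * len(theta)
    v = [0.0] * len(theta)
    for step in range(1, steps + 1):
        g = grad(theta)
        m = [beta1 * mi + (1.0 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1.0 - beta2) * gi * gi for vi, gi in zip(v, g)]
        # Bias correction: m and v start at zero, so early values are too small.
        m_hat = [mi / (1.0 - beta1 ** step) for mi in m]
        v_hat = [vi / (1.0 - beta2 ** step) for vi in v]
        theta = [th - eta * mh / (math.sqrt(vh) + eps)
                 for th, mh, vh in zip(theta, m_hat, v_hat)]
    return theta


start = [5.0, 5.0]
momentum_result = momentum(start)
adam_result = adam(start)

print(J(start), J(momentum_result), J(adam_result))
```

Both runs drive the cost from 275 down to near zero. The division by the square root of `v_hat` is what gives Adam a separate effective learning rate per parameter, so the steep and shallow directions are treated on a similar footing.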

Practical Tips for Using Gradient Descent

Here are some practical tips for using gradient descent in your machine learning projects:

- Start with a simple implementation and gradually add complexity as needed.

- Experiment with different learning rates and see how they affect convergence.

- Use techniques like learning rate schedules to adjust the learning rate over time.

- Monitor the cost function and gradients to detect issues like vanishing or exploding gradients.

- Consider using advanced variants like Adam or RMSprop for better performance.
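A couple of these tips can be combined in one small sketch: a learning rate schedule plus basic monitoring of the cost and gradient size. The schedule function `exponential_decay`, the starting rate, and the decay factor are hypothetical choices for this example.

```python
import math


def exponential_decay(eta0, decay_rate, step):
    """Hypothetical schedule: shrink the learning rate by a fixed factor each step."""
    return eta0 * decay_rate ** step


def train_with_monitoring(x0, eta0=0.9, decay_rate=0.97, steps=200):
    """Minimize (x - 3)^2 while recording cost and gradient size at every step."""
    x = x0
    cost_history = []
    grad_history = []
    for step in range(steps):
        g = 2.0 * (x - 3.0)
        cost = (x - 3.0) ** 2
        # A cost that is no longer finite is a sign of exploding updates.
        if not math.isfinite(cost):
            raise RuntimeError(f"cost is not finite at step {step}")
        cost_history.append(cost)
        grad_history.append(abs(g))
        x = x - exponential_decay(eta0, decay_rate, step) * g
    return x, cost_history, grad_history


x_final, cost_history, grad_history = train_with_monitoring(x0=0.0)

print(x_final, cost_history[0], cost_history[-1])
```

The run starts with a deliberately aggressive rate of 0.9, which makes `x` bounce across the minimum, and the decay calms the steps down as training goes on. Plotting `cost_history` and `grad_history` is usually the quickest way to spot a learning rate that is too high or gradients that have vanished.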

Real-World Applications

Gradient descent is used in a wide range of real-world applications. For example, it’s a key component in training deep neural networks for image and speech recognition. It’s also used in recommendation systems, natural language processing, and even in optimizing financial models.

The versatility of gradient descent makes it a fundamental tool in the machine learning toolkit. Whether you’re building a simple linear regression model or a complex deep learning architecture, gradient descent is likely to play a role.

Conclusion: The Power of Gradient Descent

Gradient descent optimization is a powerful and versatile technique that lies at the heart of many machine learning algorithms. By understanding how it works and its various types and variants, you can better optimize your models and achieve superior performance.

As you dive deeper into the world of machine learning, remember that gradient descent is just one tool in your toolkit. It’s a powerful one, but it’s not the only one. Experiment with different optimization algorithms and see which one works best for your specific problem. And always keep learning—the field of machine learning is constantly evolving, and there’s always more to discover.

FAQ

Q: What is the difference between batch gradient descent and stochastic gradient descent?

A: Batch gradient descent uses the entire dataset to compute the gradient at each step, making each step accurate but expensive. Stochastic gradient descent uses one data point at a time, making each update much cheaper but noisier.

Q: How do I choose the right learning rate for gradient descent?

A: Choosing the right learning rate often involves experimentation. Start with a reasonable guess and adjust based on how quickly the algorithm converges. Too high a learning rate can cause overshooting, while too low a learning rate can result in slow convergence.

Q: What are some advanced variants of gradient descent?

A: Some advanced variants of gradient descent include Momentum, Nesterov Accelerated Gradient (NAG), AdaGrad, RMSprop, and Adam. Each has its own strengths and weaknesses, and the choice depends on the specific problem and dataset.

Q: How can I deal with the vanishing gradient problem?

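A: Normalization, careful weight initialization, and architecture choices such as ReLU activations or residual connections can help mitigate the vanishing gradient problem. Adaptive variants like Adam can also help, because they scale each parameter’s step by its gradient history. Gradient clipping, by contrast, targets the opposite problem of exploding gradients.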

@article{gradient-descent-optimization-explained-a-deep-dive,

title = {Gradient Descent Optimization Explained: A Deep Dive},

author = {Chef's icon},

year = {2025},

journal = {Chef's Icon},

url = {https://chefsicon.com/gradient-descent-optimization-explained/}

}